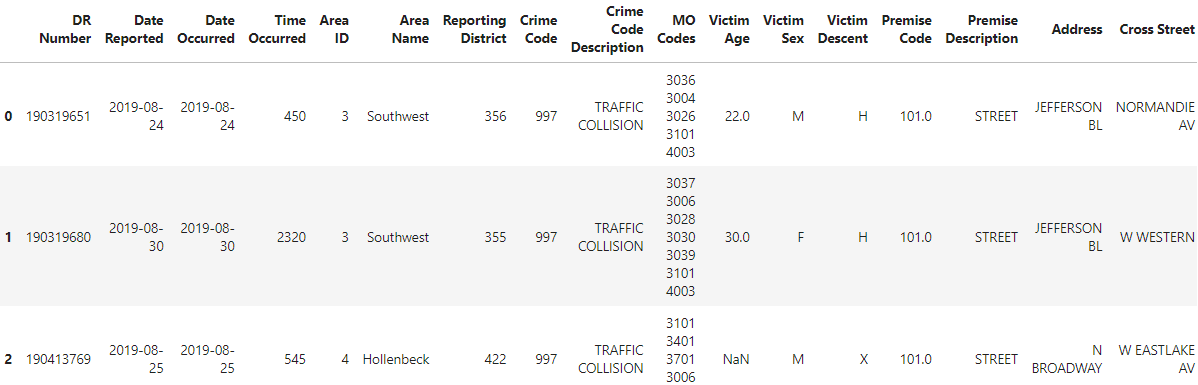

Time series analysis on LA traffic collision data

Traffic accidents are one of the leading causes of injury and death worldwide, and as a result they are a major area of research. Many factors increase the likelihood of a crash, including weather, road design, vehicle design, alcohol use, and aggressive driving. Beyond these factors, there may also be underlying patterns in when collisions occur, and time series analysis can be used to uncover them. The purpose of this project is to predict the number of collisions using time series analysis.